Signing a massive AI contract could be your biggest mistake of 2024

Like you, I’ve been reading the news about how telcos are inking huge deals for generative artificial intelligence (GenAI) with large language model (LLM) vendors. Here are some examples of what I’m talking about:

- SK Telecom’s $100 million investment in Anthropic as part of its efforts to develop a customized LLM for the telco industry;

- T-Mobile paying OpenAI $100 million over three years to use the startup’s GenAI technology;

- Vodafone committing more than $1 billion to Google for 10 years of GenAI and cloud services; and

- KT Corporation signing a multi-billion-dollar check to Microsoft to accelerate AI innovation in Korea.

I bet you’re wondering: should my telco be doing this, too?

I’m sure all the big LLM vendors have been knocking on your door, trying to get you to sign a big enterprise deal. But I’m going to go out on a limb and tell you: you may want to pump the brakes on these big AI commitments for a hot minute. You’re going to want to keep your options open while the GenAI pricing landscape works its way through a Wild West of rapidly changing costs, technological leaps, and shifting market dynamics. Locking into a multi-year deal right now all but guarantees buyer’s remorse: you’ll overpay right out of the gate, and you’ll end up with so many credits that you can’t possibly use them all in the time allotted. Here’s why you might want to slow your roll.

The CapEx AI spree is your friend

I read this interesting article from Hemant Mohapatra last week, AI—The Last Employee?, about how we are still in the “loading phase” of AI:

“The entire landscape is wide open and there is a land-grab going on. Companies such as Google, Microsoft, Meta, Nvidia, OpenAI, Anthropic etc are on a CAPEX spree. If you think of the “AI Token” as a commodity resource over time — similar to a CPU/GPU cycle, or an oil droplet — it makes sense why there is a CAPEX spree. Commodity categories work only with economies of scale, so the largest players tend to get larger. Price is the core differentiator as technical gaps diminishes over time. This is already happening in the LLM category. The flag to plant is some version of “cost per token” and whoever plants it can claim this land and then seek perpetual rent on whoever builds cities on it… What is the size of the prize that’s making folks like Larry Page say internally at Google “I am willing to go bankrupt rather than lose this race”?“

Let that paragraph sink in for a moment. If AI tokens¹ are commodities, as Mohapatra suggests, then this is a price war WHERE YOU ARE THE WINNER. At the moment, every tech giant and tons of LLM startups are racing to establish themselves as leaders and capture market share in these early days of GenAI. These guys want you to “build your city” on top of their land, and they’re willing to give it to you for nearly FREE to get you to start building now. Pricing is going to be in great flux while they fight it out. Use this loading phase to get AI implemented inside your organization without significant upfront investment and capitalize on their aggressive offerings to get ahead of your competition.

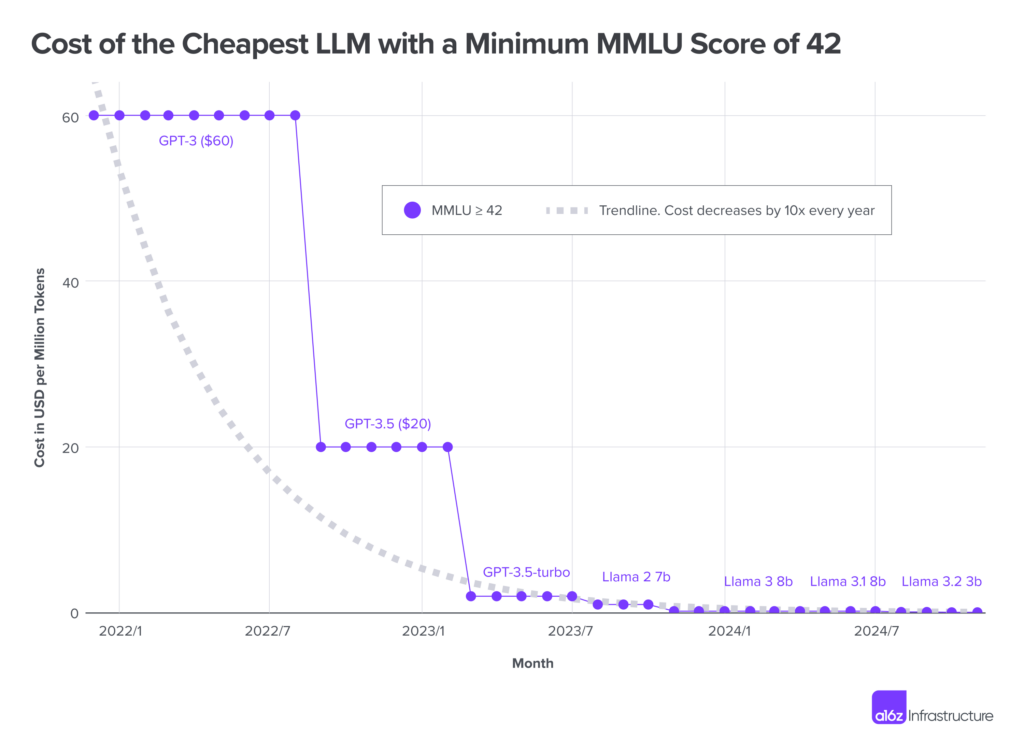

AI prices are dropping 10x year over year

What costs a fortune in AI spending now will be dirt cheap tomorrow. As you can see from the chart above, the prices for the LLMs that power GenAI are dropping by 10x year over year. You do not want to be the guy who decided to pay $100M for something that will cost $10K four years from now. Even if the prices fluctuate between now and then, the cost is going to be a lot lower than the amount you’re committing to in 2024.
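To make that arithmetic concrete, here is a minimal sketch of the price curve. It assumes prices keep falling by a constant 10x every year, which is a simplification of the trend described above, not a forecast; the function name `projected_cost` and its parameters are made up for illustration.

```python
def projected_cost(cost_today: float, years: int, annual_drop: float = 10.0) -> float:
    """Cost of the same workload after `years`, assuming prices fall
    by a constant factor of `annual_drop` every year (an assumption)."""
    return cost_today / (annual_drop ** years)


# Walk a $100M commitment down the curve, one year at a time.
for year in range(5):
    print(f"Year {year}: ${projected_cost(100_000_000, year):,.0f}")
```

Under that assumption, the $100M workload costs $10K in year four. If the real drop is slower, change `annual_drop` and rerun; even a 3x yearly drop leaves a multi-year commitment badly overpriced by the end of the term.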

But here’s the real kicker: it’s not just about overpaying. It’s about opportunity cost. While you’re locked into yesterday’s technology at yesterday’s prices, your competitors will be free to leverage whatever breakthrough (and price drop) comes next.

Stay nimble—avoid multi-year contracts

Need another reason to avoid locking into today’s high prices? The boatloads of credits that you’ll struggle to use. I’m talking about having 100x the credits to burn down as prices drop and every dollar buys more AI API calls. OUCH! Instead of locking in money with one AI vendor, play the field! Keep your contracts short, or better yet, don’t sign a contract at all.

Here’s some more advice:

- Base contracts on actual, not predicted, usage: Opt for short-term contracts sized to your actual AI API usage, so you can predict your needs accurately before committing more. Sign contracts with other vendors to test different models, and make sure your organization is using the credits. Vendors will always allow you to increase your commitment; they won’t let you shrink it.

- Pilot like a pro: Test all the models: ChatGPT, Claude, Gemini, Llama. Somebody launches an update? Try it out within 24 hours. Hear about a new startup with a new approach? Get on it. Experiment with everything. Build your applications in a way that allows PORTIONS of the app to use different models for different capabilities. Every model has different strengths and weaknesses. Use them all while we are exploring the jagged edge of the AI frontier.

- Keep an eye on the price: Monitor the market continuously. AI pricing is a war the big guys are fighting to win, and they’re undercutting each other along the way. Don’t lock in; ride the prices all the way down.
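What does "different models for different capabilities" look like in practice? Here is a rough sketch, not a reference design. Everything in it is hypothetical: `ModelRouter`, `vendor_a`, and `vendor_b` are stand-ins, and a real version would wrap each vendor's actual client behind the same simple function signature.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Assumption: a "model" is any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]


@dataclass
class ModelRouter:
    """Maps each app capability to whichever model currently serves it."""
    routes: Dict[str, ModelFn]

    def run(self, capability: str, prompt: str) -> str:
        # Look up the model assigned to this capability and call it.
        return self.routes[capability](prompt)

    def swap(self, capability: str, model: ModelFn) -> None:
        # Repoint one capability at a new model; callers do not change.
        self.routes[capability] = model


# Hypothetical stand-ins for real vendor clients.
def vendor_a(prompt: str) -> str:
    return f"[vendor-a] {prompt}"


def vendor_b(prompt: str) -> str:
    return f"[vendor-b] {prompt}"


router = ModelRouter(routes={"summarize": vendor_a, "classify": vendor_b})
print(router.run("summarize", "Summarize this ticket"))

# A cheaper or better model ships? Swap it in for one capability only.
router.swap("summarize", vendor_b)
print(router.run("summarize", "Summarize this ticket"))
```

The point of the indirection is that switching vendors becomes a one-line change per capability instead of a rewrite, which is what lets you follow prices down without a migration project each time.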

Once you’re confident that you know what your options are and what you need, and once prices have stabilized a bit, THEN lock in your rates.

Making AI work for your telco today

While the big players duke it out in their pricing war, smart telcos aren’t sitting idle. They’re taking advantage of this unique moment in tech history to experiment, learn, and build capabilities—without the golden handcuffs of massive contracts.

At Totogi, we get it. That’s why our AI-powered solutions like BSS Magic are built to be LLM-agnostic and flexible. We can help you harness the best AI capabilities for your specific BSS modification needs while keeping you nimble enough to take advantage of falling AI prices and improving technology. Our approach lets you:

- Start small and scale based on actual usage, not projected numbers

- Switch between different AI models as price and performance evolve

- Build real-world experience without massive upfront commitments

Remember: The goal isn’t to win the race to sign the biggest AI contract—it’s to win the race to actually deliver AI-powered value to your customers. And in today’s rapidly evolving AI landscape, that means staying flexible and smart about your commitments as you ride the price curve down while building real capabilities.

Ready to start your AI journey the smart way? Let’s talk.

¹ Anthropic and OpenAI offer usage-based pricing and charge slightly different rates for input and output “tokens,” which are chunks of text that can be as small as one character or as long as a full word. (In English, one token is about four characters, or ¾ of a word on average.) Google Vertex AI charges per character, the same price for input and output. Amazon Bedrock charges per use, with different rates for its different foundation models. With all these tools, the more you use them, the more you pay.
