Yes, you can vibe code a telco app

I was talking to the CIO of an APAC Tier-1 telco recently, and she told me it’s impossible to build a telco app by vibe coding, which is to say by prompting large language models (LLMs) to write the code.

“Don’t talk to me about that,” she said.

Sorry to burst your bubble, but I AM going to talk about it. It is possible, and it’ll very soon be way better than hand coding.

But before I do, I want to take you back to May 5, 1954. No one had ever run a mile in under four minutes. Physiologists said it was impossible: the human body couldn’t handle the strain, the heart would give out, the lungs would collapse. It was accepted wisdom, backed by science and reinforced by failure after failure.

And then on May 6, Roger Bannister did it: three minutes, fifty-nine point four seconds.

Then something remarkable happened: within 46 days, John Landy broke Bannister’s record. Within three years, 16 runners had broken the four-minute barrier. The only thing that changed was belief.

Once people saw what was possible, the mental barrier crumbled. Something similar is about to happen in telco software development. The formula is simple: powerful LLMs + sophisticated tools + telco-specific context = production-ready code. The first two are ready. The third is what Totogi built. The one ingredient the formula leaves out is belief, and it’s the only thing holding you back.

Who says you can’t?

Don’t just take my word for it. Listen to the leaders of the world’s most successful tech companies.

- Garry Tan, President and CEO of Y Combinator, recently said about vibe coding, “This isn’t a fad. This isn’t going away. It’s actually the dominant way to code and if you’re not doing it you might just be left behind.”

- Microsoft CEO Satya Nadella says as much as 30% of Microsoft code is now written by artificial intelligence, a figure he expects to grow.

- On Google’s Q3 2024 earnings call a year ago, CEO Sundar Pichai said that more than a quarter of all new code at Google is generated by AI. It’s almost certainly more than that now.

These aren’t futurists making predictions. These are executives reporting what’s already happening in production at scale. And the reason it’s working? The underlying models are getting exponentially better.

But it’s your future that’s really at stake. The companies embracing AI-assisted development aren’t just moving faster; they’re building compounding advantages. With every sprint, their AI learns more about their domain. With every feature, their developers get better at working with AI. The gap between early adopters and wait-and-see companies won’t be measured in months. It’ll be measured in generations of capability. By the time you catch up to where the leaders are now, they’ll be solving problems you haven’t even dreamed of.

The models are ready

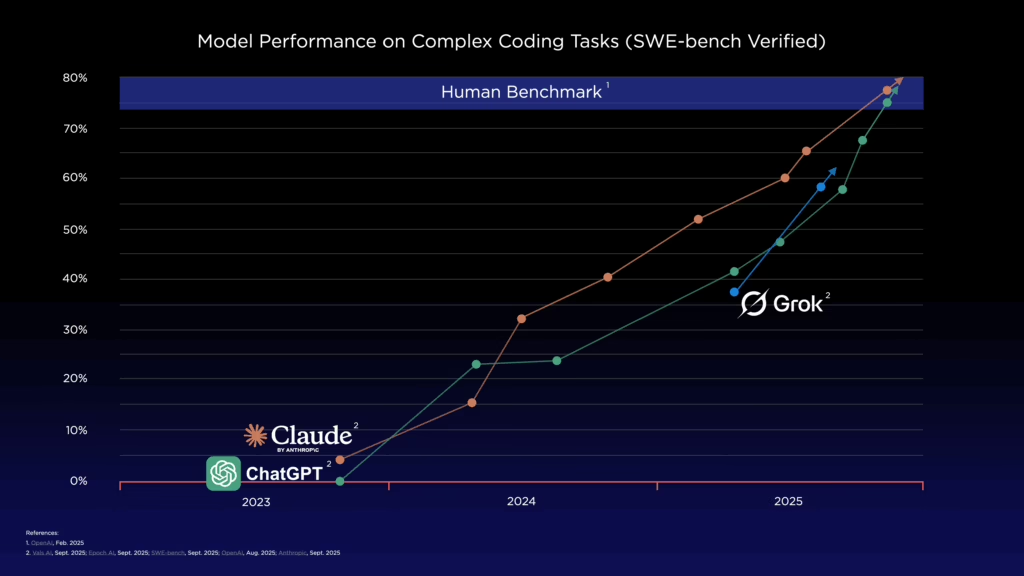

Released in the last couple months, Anthropic’s Claude Sonnet 4.5 and OpenAI’s GPT-5 are showing reasoning capabilities that would’ve seemed like science fiction just two years ago. Sonnet 4.5 scored 77.2% on SWE-bench Verified, a benchmark that tests real-world software engineering tasks. GPT-5 scored 74.9%. Human performance on the same tests is 77-80%.

For context: a year ago, previous versions of these same models scored in the low 40s. In 2023, they were in the mid-30s. This is exponential growth, not incremental improvement. These models can now understand complex system architectures, debug multi-threaded applications, and handle the intricate business logic that makes telco applications so gnarly.

“But our systems ARE uniquely complex,” you’re thinking. “You don’t understand our business logic, our integration requirements, our reliability standards.”

Here’s what you’re missing: telco is the GOOD kind of complex for AI. Your charging logic isn’t unknowable—it’s documented in the 3GPP specifications. Your integration patterns aren’t mystical—they’re standardized by TM Forum and MEF. Your network orchestration follows CAMARA and well-defined APIs.

Telco has decades of accumulated, documented, systematized knowledge. And that’s exactly what LLMs excel at: pattern recognition in well-documented domains. The same AI that’s rebuilding trading platforms in financial services and modernizing patient systems in healthcare can absolutely handle your charging logic and subscriber management.

Your complexity isn’t a barrier. It’s a problem that’s ready to be solved.

The AI coding tools are ready

It’s not just the LLMs that are getting better; the AI coding tools are, too. These integrated development environments (IDEs) aren’t the glorified autocomplete tools your team tested six months ago. Today’s AI-assisted IDEs are like having a senior developer pair-programming with you 24/7: one who never gets tired or frustrated and has read every piece of telco documentation ever written. For example:

- Cursor can understand your entire codebase and suggest changes spanning multiple files while maintaining architectural consistency.

- Replit’s Agent 3 builds entire applications autonomously, testing and debugging its own code in continuous loops.

- GitHub Copilot with GPT-5 spins up entire project structures from natural language descriptions.

- Microsoft’s Azure AI Foundry offers an integrated platform where you can build and deploy AI applications instantly, with the infrastructure already connected.

Even the “extreme skeptics” among us are so impressed that they’re designing benchmark tests to track the tools’ abilities and progress.

For our industry, the real game-changer is when these tools get trained on telco-specific code and documentation. Imagine an AI assistant that understands 3GPP standards, knows the difference between a packet data protocol (PDP) context for 2G/3G data sessions, a packet data network (PDN) connection for LTE, and a protocol data unit (PDU) session for 5G, and can write charging functions that handle edge cases your junior developers would miss. Way beyond generic code generation, this will be domain-aware AI that understands the quirks of the Diameter protocol and the complexities of real-time billing.
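To make “edge cases” concrete, here is a minimal sketch of prepaid quota handling, loosely modeled on the reserve-then-report cycle that online charging uses (for example, Diameter Credit-Control in RFC 4006). Everything in it is an assumption for illustration: the `Account` class, the function names, and the policy of never charging beyond a grant are hypothetical, not Totogi’s or any standard’s implementation. It shows two cases a naive version often misses: a partial grant when the balance is low, and a usage report that exceeds the grant.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical prepaid account; units could be bytes, seconds, or events."""
    balance: int        # units the subscriber has paid for
    reserved: int = 0   # units granted to live sessions but not yet settled

    @property
    def available(self) -> int:
        return self.balance - self.reserved


def grant_quota(account: Account, requested: int) -> int:
    """Reserve up to `requested` units and return how many were granted.

    Edge case: a low balance yields a partial grant, and an exhausted
    balance yields 0 (not an error), so the caller can deny service cleanly.
    """
    if requested <= 0:
        raise ValueError("requested units must be positive")
    granted = max(0, min(requested, account.available))
    account.reserved += granted
    return granted


def settle_usage(account: Account, granted: int, used: int) -> int:
    """Settle an earlier grant against reported usage; return the units charged.

    Edge case: the network can report more usage than was granted (traffic
    in flight when the quota ran out). This sketch caps the charge at the
    grant; whether to bill the overage is an assumed business-policy choice.
    """
    if used < 0:
        raise ValueError("used units cannot be negative")
    if not 0 <= granted <= account.reserved:
        raise ValueError("grant does not match an outstanding reservation")
    charged = min(used, granted)
    account.reserved -= granted   # release the whole reservation
    account.balance -= charged    # only what was actually used leaves the balance
    return charged


# Example run (hypothetical numbers):
acct = Account(balance=100)
print(grant_quota(acct, 60))                     # 60
print(grant_quota(acct, 60))                     # 40: partial grant, balance nearly committed
print(settle_usage(acct, granted=60, used=50))   # 50
print(settle_usage(acct, granted=40, used=45))   # 40: overage capped at the grant
print(acct.balance, acct.reserved)               # 10 0
```

Real charging systems add rating groups, validity timers, and multiple concurrent sessions; the sketch keeps only the reservation arithmetic.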

AI doesn’t replace your developers. It enables your organization to do exponentially more with the same resources. Fix all the bugs instead of a quarter of them. Ship features next quarter instead of next year. Finally modernize that legacy codebase instead of limping along with code from 2008.

Context engineering is the last piece you need

Here’s the key: the best models and tools in the world are only as good as the context you give them. Context engineering—feeding the right information to LLMs in the right way—is what transforms general-purpose AI into a domain expert that can build production-ready telco software.

Would you ask a brilliant developer to build your charging system without showing them your architecture, your data models, or your business rules? Of course not. Yet that’s exactly what most companies are doing with AI—throwing generic tools at domain-specific problems and wondering why the results are mediocre.

The breakthrough comes from structured context—turning your unstructured telco knowledge into a format AI can actually work with. And that requires building knowledge graphs that transform raw data into structured context for AI systems. It’s sophisticated work that requires deep expertise in both AI engineering and knowledge representation.

Totogi, where I’m acting CEO, is pioneering this approach for telecom. While legacy BSS vendors are trying to add AI features on top of decades-old architectures, we built BSS Magic as AI-native from day one. Context engineering isn’t a feature; it’s the foundation. Our BSS Magic platform creates a telco-specific ontology—a structured knowledge graph that understands your subscribers, products, services, business rules, and how they all relate to each other. It’s not just documentation. It’s your business logic and data relationships mapped in a way AI can reason about.
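For readers who haven’t worked with one, a knowledge graph can be as simple as a set of subject-predicate-object triples plus a way to traverse them. The toy sketch below assumes made-up entity IDs and relation names (`subscribes_to`, `includes`, `governed_by`, `requires`); it is not BSS Magic’s data model or API, only an illustration of relationships mapped so that a question becomes a graph traversal.

```python
from collections import defaultdict, deque

# Toy triples: (subject, predicate, object). All IDs and predicates are hypothetical.
TRIPLES = [
    ("sub:alice", "subscribes_to", "product:unlimited_5g"),
    ("product:unlimited_5g", "includes", "service:mobile_data"),
    ("product:unlimited_5g", "includes", "service:voice"),
    ("product:unlimited_5g", "governed_by", "rule:fair_use_cap"),
    ("service:mobile_data", "requires", "resource:apn_internet"),
]


def build_index(triples):
    """Index outgoing edges: subject -> list of (predicate, object)."""
    index = defaultdict(list)
    for subj, pred, obj in triples:
        index[subj].append((pred, obj))
    return index


def reachable(index, start, predicates):
    """Breadth-first traversal from `start`, following only the given predicates."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for pred, obj in index.get(node, []):
            if pred in predicates and obj not in seen:
                seen.add(obj)
                queue.append(obj)
    return seen


index = build_index(TRIPLES)
# "Which services does Alice get?" -> follow subscription and inclusion edges only.
services = {n for n in reachable(index, "sub:alice", {"subscribes_to", "includes"})
            if n.startswith("service:")}
print(sorted(services))  # ['service:mobile_data', 'service:voice']
```

The design choice worth noting is that the question “which rules apply to Alice’s plan?” becomes a walk over `governed_by` edges rather than a search through documents.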

Telco applications are actually perfect for this approach. They’re complex in predictable ways. Business logic is well-documented. Integration patterns are standardized. Error handling requirements are clearly defined. You’re not making it up as you go; telco has decades of accumulated knowledge that can be systematically organized into structured context.
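“Clearly defined” is meant literally here. The Diameter base protocol (RFC 6733) groups Result-Code values into classes by their thousands digit, and the Credit-Control application (RFC 4006) adds charging-specific codes such as DIAMETER_CREDIT_LIMIT_REACHED (4012). The sketch below shows how mechanically that classification can be encoded; the function names and the `may_retry_later` helper are illustrative assumptions, not taken from any Diameter library.

```python
from enum import Enum


class ResultClass(Enum):
    # Result-Code classes defined by the Diameter base protocol (RFC 6733)
    INFORMATIONAL = 1
    SUCCESS = 2
    PROTOCOL_ERROR = 3
    TRANSIENT_FAILURE = 4
    PERMANENT_FAILURE = 5


# A few well-known codes from RFC 6733 and RFC 4006, for readable logs.
KNOWN_CODES = {
    2001: "DIAMETER_SUCCESS",
    3004: "DIAMETER_TOO_BUSY",
    4012: "DIAMETER_CREDIT_LIMIT_REACHED",
    5030: "DIAMETER_USER_UNKNOWN",
}


def classify(result_code: int) -> ResultClass:
    """Map a Result-Code value to its class via the thousands digit.

    This sketch rejects anything outside the five defined classes.
    """
    if not 1000 <= result_code <= 5999:
        raise ValueError(f"not a Diameter Result-Code: {result_code}")
    return ResultClass(result_code // 1000)


def may_retry_later(result_code: int) -> bool:
    """Transient failures (4xxx) signal the request might succeed if sent again later."""
    return classify(result_code) is ResultClass.TRANSIENT_FAILURE


def describe(result_code: int) -> str:
    """Human-readable label, e.g. for logs or an AI assistant's context."""
    name = KNOWN_CODES.get(result_code, "UNKNOWN_CODE")
    return f"{result_code} {name} ({classify(result_code).name})"


print(describe(4012))         # 4012 DIAMETER_CREDIT_LIMIT_REACHED (TRANSIENT_FAILURE)
print(may_retry_later(5030))  # False
```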

BSS Magic is the bridge between your business knowledge and your development tools. When your developers (or AI coding assistants) need to build a new feature, BSS Magic provides the context: “Here’s how subscriber data flows. Here’s how rating works. Here’s how this product relates to that service. Here are the edge cases you need to handle.”
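Mechanically, “providing the context” can be pictured as selecting the facts relevant to a task and placing them in front of the model, within a size budget. The sketch below is a deliberately simple, topic-tagged version of that idea; the `Fact` store, the topic names, the example facts, and `build_context` are all hypothetical and don’t describe how BSS Magic actually works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    topic: str   # e.g. "subscriber_data", "rating", "edge_case"
    text: str


# Hypothetical example facts; a real system would derive these from a knowledge graph.
FACTS = [
    Fact("subscriber_data", "Subscriber records are created in CRM and pushed to charging on activation."),
    Fact("rating", "Data usage beyond the bundle allowance is rated per MB."),
    Fact("product_service", "Product 'Unlimited 5G' includes the services 'mobile_data' and 'voice'."),
    Fact("edge_case", "A session that spans midnight may cross two tariff periods."),
]


def build_context(task: str, topics: set[str], facts=FACTS, max_chars: int = 2000) -> str:
    """Assemble prompt context: the task first, then only facts on relevant topics.

    Facts are added in order until the character budget would be exceeded;
    the loop stops there rather than truncating a fact mid-sentence.
    """
    lines = [f"Task: {task}", "Relevant context:"]
    used = sum(len(line) + 1 for line in lines)  # +1 for each newline
    for fact in facts:
        if fact.topic not in topics:
            continue
        line = f"- [{fact.topic}] {fact.text}"
        if used + len(line) + 1 > max_chars:
            break
        lines.append(line)
        used += len(line) + 1
    return "\n".join(lines)


print(build_context("Add a weekend data bonus", {"rating", "product_service"}))
```

Real context engineering would rank facts by relevance rather than filter by fixed tags, but the shape is the same: pick, order, and budget what the model sees.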

Our team combines deep telco domain expertise with cutting-edge AI engineering. We understand both the intricacies of BSS systems AND how to structure that knowledge for AI consumption. That combination is rare, and it’s what makes BSS Magic work.

The result is AI that doesn’t just generate syntactically correct code, but code that understands your business.

With powerful LLMs, sophisticated tools, and BSS Magic providing telco-specific structured context, you have everything you need to build production-ready applications with AI assistance today.

The only thing missing is belief that it’s possible.

This is our four-minute mile moment

Being a vibe-coding skeptic today is like being one of those pre-1954 physiologists insisting no human could run a mile in under four minutes. But the Bannister moment is here. At Totogi, we’re already building production-ready telco applications using AI-assisted development. We’re not waiting for perfect tools—we’re making today’s tools work. We’re not fighting the technology—we’re embracing it and riding the wave of innovation.

And we’re going to prove it at TelecomTV’s AI-Native Telco Forum in Düsseldorf on October 23-24. We’ll show you exactly what’s possible when you combine powerful LLMs, sophisticated tools, and BSS Magic’s context engineering.

Not vaporware. Not roadmap promises. Just a live demonstration of what AI-assisted development can do for telco.

Will you be running with the leaders or watching from the sidelines while your competitors lap you? The models are ready. The tools are ready. Your people need to catch up.

If you’re tired of people telling you it’s impossible to vibe code a telco app, join us in Düsseldorf.

It’s time to break the four-minute mile. Will you be there when it happens?


Get my FREE insider newsletter, delivered every two weeks, with curated content to help telco execs across the globe move to the public cloud.


Get started

Contact Totogi today to start your cloud and AI journey and achieve up to 80% lower TCO and 20% higher ARPU.

Transform

Michael Walker, who leads enterprise AI deployment strategy at Totogi, talks about our approach: vertical AI, built for telcos, that understands your business from day one.

Engage

Set up a meeting to learn how the Totogi BSS Magic platform can unify and supercharge your legacy systems, kickstarting your AI-first transition.

Explore

Visit our BSS Magic page to learn more about what this AI-driven product can do and how it works. Our demos let you see it in action.